In a previous post, we showed how to interact with S3 using the AWS CLI. In this post, we give a brief introduction to Boto3, the AWS SDK for Python, and show in particular how to work with S3.

Download and Configure Boto3

You can install the Boto3 package with pip:

```shell
python -m pip install boto3
```

or through Anaconda:

```shell
conda install -c anaconda boto3
```

Next, configure your credentials and your default region. The credentials go in ~/.aws/credentials:

```ini
[default]
aws_access_key_id = YOUR_KEY
aws_secret_access_key = YOUR_SECRET
```

and the region goes in ~/.aws/config:

```ini
[default]
region = us-east-1
```
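If you prefer to create these files from Python rather than by hand (the AWS CLI's aws configure command also writes them), here is a minimal sketch using the standard-library configparser. The helper write_aws_files and its parameters are hypothetical. It merges into existing files and keeps other profiles, but it does not preserve comments. Note that a non-default profile is written as [profile NAME] in the config file but as plain [NAME] in the credentials file.

```python
import configparser
from pathlib import Path


def write_aws_files(aws_dir, access_key, secret_key, region, profile='default'):
    """Write one profile into <aws_dir>/credentials and <aws_dir>/config.

    Hypothetical helper: other profiles are kept, comments are lost.
    """
    aws_dir = Path(aws_dir)
    aws_dir.mkdir(parents=True, exist_ok=True)

    # credentials file: the section name is the bare profile name
    credentials = configparser.ConfigParser(interpolation=None)
    credentials.read(aws_dir / 'credentials')  # a missing file is skipped
    credentials[profile] = {
        'aws_access_key_id': access_key,
        'aws_secret_access_key': secret_key,
    }
    with open(aws_dir / 'credentials', 'w') as f:
        credentials.write(f)

    # config file: non-default profiles use the "profile NAME" section form
    section = profile if profile == 'default' else f'profile {profile}'
    config = configparser.ConfigParser(interpolation=None)
    config.read(aws_dir / 'config')
    config[section] = {'region': region}
    with open(aws_dir / 'config', 'w') as f:
        config.write(f)
```

For example, write_aws_files(Path.home() / '.aws', 'YOUR_KEY', 'YOUR_SECRET', 'us-east-1') produces the two files shown above. Try it on a scratch directory first if you already have real credentials.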

Once that is done, you can create a client:

```python
import boto3

s3 = boto3.client('s3')
```

Note that many examples use boto3.resource instead of boto3.client. The two interfaces differ in a few ways, and I will quote an answer I found on Stack Overflow that summarizes them:

Client:

- low-level AWS service access

- generated from AWS service description

- exposes botocore client to the developer

- typically maps 1:1 with the AWS service API

- all AWS service operations are supported by clients

- snake-cased method names (e.g. ListBuckets API => list_buckets method)

Resource:

- higher-level, object-oriented API

- generated from resource description

- uses identifiers and attributes

- has actions (operations on resources)

- exposes subresources and collections of AWS resources

- does not provide 100% API coverage of AWS services
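To make the difference concrete, here is a sketch that lists bucket names both ways. The function names are hypothetical. The client version works with the raw response dictionary, while the resource version iterates over Bucket objects. You would pass boto3.client('s3') to the first and boto3.resource('s3') to the second.

```python
def bucket_names_via_client(s3_client):
    """List bucket names with the low-level client (boto3.client('s3'))."""
    # The client returns a plain dict that mirrors the ListBuckets API response.
    response = s3_client.list_buckets()
    return [bucket['Name'] for bucket in response['Buckets']]


def bucket_names_via_resource(s3_resource):
    """List bucket names with the higher-level resource (boto3.resource('s3'))."""
    # The resource exposes a collection of Bucket objects; name is an identifier attribute.
    return [bucket.name for bucket in s3_resource.buckets.all()]
```

Both return the same list of names; the resource version simply hides the response dictionary behind objects.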

How to List your Buckets

Assuming you have already created some S3 buckets, you can list them as follows:

```python
list_buckets = s3.list_buckets()

for bucket in list_buckets['Buckets']:
    print(bucket['Name'])
```

```
gpipis-cats-and-dogs
gpipis-test-bucket
my-petsdata
```

How to Create a New Bucket

Let’s say that we want to create a new bucket in S3. Let’s call it 20201920-boto3-tutorial.

```python
s3.create_bucket(Bucket='20201920-boto3-tutorial')
```
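The call above works when your client is configured for us-east-1, as in the config file earlier. In any other region, S3 expects the region to be passed as a LocationConstraint, while passing us-east-1 as a constraint is rejected. Here is a minimal sketch of a helper that handles both cases; the name create_bucket_in_region is hypothetical.

```python
def create_bucket_in_region(s3_client, bucket_name, region):
    """Create bucket_name in region, handling the us-east-1 special case."""
    if region == 'us-east-1':
        # us-east-1 is the default location and must not be sent as a constraint.
        return s3_client.create_bucket(Bucket=bucket_name)
    # Every other region is passed explicitly as the bucket's location.
    return s3_client.create_bucket(
        Bucket=bucket_name,
        CreateBucketConfiguration={'LocationConstraint': region},
    )
```

For example: create_bucket_in_region(boto3.client('s3', region_name='eu-west-1'), 'my-unique-bucket-name', 'eu-west-1'), where the bucket name is a placeholder.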

Let’s check that the bucket now exists on S3:

```python
for bucket in s3.list_buckets()['Buckets']:
    print(bucket['Name'])
```

```
20201920-boto3-tutorial
gpipis-cats-and-dogs
gpipis-test-bucket
my-petsdata
```

As we can see, the 20201920-boto3-tutorial bucket was added.

How to Delete an Empty Bucket

We can simply delete an empty bucket:

```python
s3.delete_bucket(Bucket='my-bucket')
```

If you want to delete several empty buckets, you can write the following loop:

```python
list_of_buckets_i_want_to_delete = ['my-bucket01', 'my-bucket02', 'my-bucket03']

for bucket in s3.list_buckets()['Buckets']:
    # Only delete buckets whose names are in our list (each must already be empty)
    if bucket['Name'] in list_of_buckets_i_want_to_delete:
        s3.delete_bucket(Bucket=bucket['Name'])
```
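delete_bucket only succeeds on an empty bucket; otherwise S3 responds with a BucketNotEmpty error. One way to empty a bucket first is the resource API's batch delete on the bucket's object collection. The sketch below uses a hypothetical function name and is irreversible. It assumes versioning is not enabled: for a versioned bucket, the old versions would also have to be removed (for example with bucket.object_versions.all().delete()) before the bucket can be deleted.

```python
def empty_and_delete_bucket(s3_resource, bucket_name):
    """Remove every object in bucket_name, then delete the now-empty bucket.

    Irreversible. Assumes an unversioned bucket.
    """
    bucket = s3_resource.Bucket(bucket_name)
    # Batch action on the collection: objects are removed with DeleteObjects
    # requests instead of one DeleteObject request per object.
    bucket.objects.all().delete()
    # The bucket is empty now, so DeleteBucket can succeed.
    bucket.delete()
```

Usage: empty_and_delete_bucket(boto3.resource('s3'), 'my-bucket01').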

Bucket vs Object

A bucket's name is unique across all of S3, not just within your account, and a bucket can contain many objects, which play the role of files. Each object is identified by its key: the full path from the bucket root (for example mytxt/file01.txt), which is unique within the bucket.
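The s3://bucket/key notation used by the AWS CLI shows this split directly. As a small illustration, here is a sketch of a hypothetical helper that separates such a URI into the bucket name and the object key, the two values that the Bucket and Key parameters in this post expect.

```python
def split_s3_uri(uri):
    """Split 's3://bucket/path/to/key' into ('bucket', 'path/to/key')."""
    scheme = 's3://'
    if not uri.startswith(scheme):
        raise ValueError(f'not an S3 URI: {uri!r}')
    # Everything up to the first '/' is the bucket; the rest, verbatim, is the key.
    bucket, _, key = uri[len(scheme):].partition('/')
    if not bucket:
        raise ValueError(f'missing bucket name: {uri!r}')
    return bucket, key
```

For example, split_s3_uri('s3://20201920-boto3-tutorial/mytxt/file01.txt') returns ('20201920-boto3-tutorial', 'mytxt/file01.txt'). Splitting by hand instead of with urllib.parse keeps characters such as ? or # that are legal in keys.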

Upload files to S3

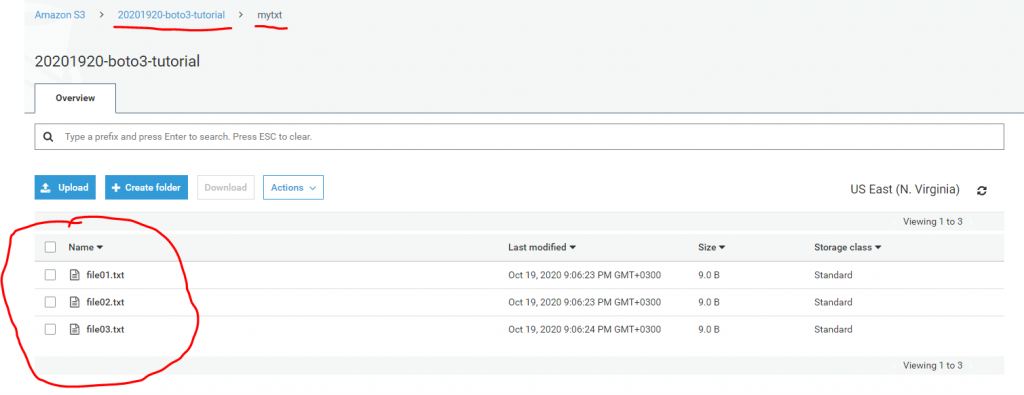

I have three .txt files, and I will upload them to my bucket under the mytxt/ prefix. S3 has no real folders; the prefix is simply the beginning of each object's key.

```python
# Upload file01.txt, file02.txt and file03.txt under the mytxt/ prefix
for filename in ['file01.txt', 'file02.txt', 'file03.txt']:
    s3.upload_file(Bucket='20201920-boto3-tutorial',
                   Filename=filename,          # local file
                   Key=f'mytxt/{filename}')    # object key in the bucket
```

The three .txt files are now in the 20201920-boto3-tutorial bucket under the mytxt/ prefix.
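To upload a whole folder rather than individual files, you can walk the directory and build each key from the file's relative path. Here is a minimal sketch; upload_directory is a hypothetical helper that takes the client created earlier.

```python
import os


def upload_directory(s3_client, local_dir, bucket, prefix=''):
    """Upload every file under local_dir, keyed by prefix + relative path."""
    uploaded = []
    for root, _dirs, files in os.walk(local_dir):
        for name in sorted(files):
            path = os.path.join(root, name)
            # S3 keys always use '/', whatever separator the local OS uses.
            relative = os.path.relpath(path, local_dir).replace(os.sep, '/')
            key = f"{prefix.rstrip('/')}/{relative}" if prefix else relative
            s3_client.upload_file(Filename=path, Bucket=bucket, Key=key)
            uploaded.append(key)
    return uploaded
```

Usage: upload_directory(s3, 'my_local_folder', '20201920-boto3-tutorial', 'mytxt/'), where my_local_folder is a placeholder path.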

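Note: the objects we upload to S3 are private by default. To make one publicly readable, pass ExtraArgs={'ACL': 'public-read'}, as in the example below. Keep in mind that buckets created since April 2023 have Block Public Access enabled and ACLs disabled by default, so this call fails on such a bucket unless those settings are changed first.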

```python
s3.upload_file(Bucket='20201920-boto3-tutorial',
               Filename='file03.txt',
               Key='mytxt/file03.txt',
               ExtraArgs={'ACL': 'public-read'})
```
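If the goal is only to let someone download a private object, a presigned URL avoids changing ACLs at all: anyone holding the URL can GET that one object until the URL expires. Here is a sketch using the client's generate_presigned_url method; share_link is a hypothetical wrapper name.

```python
def share_link(s3_client, bucket, key, expires_in=3600):
    """Return a presigned GET URL for one object, valid for expires_in seconds."""
    return s3_client.generate_presigned_url(
        'get_object',                            # the client method the URL authorizes
        Params={'Bucket': bucket, 'Key': key},
        ExpiresIn=expires_in,
    )
```

Usage: print(share_link(s3, '20201920-boto3-tutorial', 'mytxt/file03.txt', expires_in=600)). The URL is signed locally with your credentials, so generating it makes no request to S3.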

List the Objects

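We can list the objects as follows:

```python
response = s3.list_objects(Bucket='20201920-boto3-tutorial', Prefix='mytxt/')

# 'Contents' is missing from the response when no object matches the prefix
for obj in response.get('Contents', []):
    print(obj['Key'])
```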

Output:

```
mytxt/file01.txt
mytxt/file02.txt
mytxt/file03.txt
```

Delete the Objects

Suppose I want to delete all the objects in the 20201920-boto3-tutorial bucket under the mytxt/ prefix. We can delete them one at a time as follows:

```python
response = s3.list_objects(Bucket='20201920-boto3-tutorial', Prefix='mytxt/')

for obj in response.get('Contents', []):
    s3.delete_object(Bucket='20201920-boto3-tutorial', Key=obj['Key'])
```
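Two limits apply to a loop like this: a single list_objects call returns at most 1,000 keys, and delete_object sends one request per object. Here is a sketch that pages through every key with a list_objects_v2 paginator and removes each page with one delete_objects call, which accepts up to 1,000 keys. delete_prefix is a hypothetical name.

```python
def delete_prefix(s3_client, bucket, prefix):
    """Delete every object whose key starts with prefix; return the count deleted."""
    paginator = s3_client.get_paginator('list_objects_v2')
    deleted = 0
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # Each page holds at most 1,000 keys, the DeleteObjects per-request limit.
        # 'Contents' is absent when a page has no matching objects.
        objects = [{'Key': obj['Key']} for obj in page.get('Contents', [])]
        if not objects:
            continue
        # Quiet mode: the response only reports keys that failed to delete.
        response = s3_client.delete_objects(
            Bucket=bucket, Delete={'Objects': objects, 'Quiet': True}
        )
        errors = response.get('Errors', [])
        if errors:
            raise RuntimeError(f'{len(errors)} object(s) not deleted, e.g. {errors[0]}')
        deleted += len(objects)
    return deleted
```

Usage: delete_prefix(s3, '20201920-boto3-tutorial', 'mytxt/').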

How to Download an Object

Suppose we want to download the dataset.csv object under the mycsvfiles/ prefix in the bucket my-bucket-name and save it locally as my_csv_file.csv. We can download the existing object (i.e. file) as follows:

```python
s3.download_file(Bucket='my-bucket-name',
                 Key='mycsvfiles/dataset.csv',
                 Filename='my_csv_file.csv')
```
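To download everything under a prefix, you can combine a list_objects_v2 paginator with download_file and recreate the key structure as local folders. Here is a sketch; download_prefix and its parameters are hypothetical, and it refuses keys that would resolve to a path outside the target folder.

```python
import os


def download_prefix(s3_client, bucket, prefix, local_dir):
    """Download every object under prefix into local_dir, mirroring key paths."""
    root = os.path.abspath(local_dir)
    paginator = s3_client.get_paginator('list_objects_v2')
    downloaded = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get('Contents', []):
            key = obj['Key']
            if key.endswith('/'):
                continue  # zero-byte "folder" placeholder created by some tools
            path = os.path.abspath(os.path.join(root, *key.split('/')))
            # Guard against keys such as '../x' escaping the target folder.
            if os.path.commonpath([root, path]) != root:
                raise ValueError(f'key escapes local_dir: {key!r}')
            os.makedirs(os.path.dirname(path), exist_ok=True)
            s3_client.download_file(Bucket=bucket, Key=key, Filename=path)
            downloaded.append(path)
    return downloaded
```

Usage: download_prefix(s3, 'my-bucket-name', 'mycsvfiles/', 'data'), where data is a placeholder folder name.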

How to Get an Object

Instead of downloading an object to disk, you can read its contents directly into memory. This is common with CSV files, for example, when you want to load them into pandas DataFrames. Let’s see how we can read file01.txt under the mytxt/ prefix:

```python
obj = s3.get_object(Bucket='20201920-boto3-tutorial', Key='mytxt/file01.txt')

# obj['Body'] is a stream; read() returns bytes, which we decode to text
obj['Body'].read().decode('utf-8')
```

Output:

```
'This is the content of the file01.txt'
```

How to Upload an Object from Memory to S3

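We can also upload any binary file-like object with upload_fileobj, for example a file opened in binary mode:

```python
import boto3

target_bucket = 'my-bucket'
target_file = 'test.csv'

s3 = boto3.client('s3')

# upload_fileobj expects a file-like object opened in binary mode
with open(target_file, 'rb') as f:
    s3.upload_fileobj(f, target_bucket, target_file)
```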
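If the data only exists in memory (a string you built, for example), there is no need to write a temporary file: wrapping the bytes in io.BytesIO gives upload_fileobj the binary file-like object it expects. Here is a sketch with a hypothetical helper name. For small payloads, s3.put_object(Bucket=..., Key=..., Body=data) is another option.

```python
import io


def upload_text(s3_client, text, bucket, key, encoding='utf-8'):
    """Upload a Python string as an S3 object without writing a temporary file."""
    # Encode the text and wrap the bytes in an in-memory binary file object.
    buffer = io.BytesIO(text.encode(encoding))
    s3_client.upload_fileobj(buffer, bucket, key)
```

Usage: upload_text(s3, 'a,b\n1,2\n', 'my-bucket', 'test.csv').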

Functions to Upload and Download Objects To and From S3

Below are two functions: one uploads a local file to S3, and the other downloads an object to a local file. Note that here we use resource('s3') instead of the client.

```python
import boto3

# http://boto3.readthedocs.io/en/latest/guide/s3.html

def write_to_s3(filename, bucket, key):
    with open(filename, 'rb') as f:  # Read in binary mode
        boto3.Session().resource('s3').Bucket(bucket).Object(key).upload_fileobj(f)


def download_from_s3(filename, bucket, key):
    with open(filename, 'wb') as f:  # Write in binary mode
        boto3.Session().resource('s3').Bucket(bucket).Object(key).download_fileobj(f)
```

How to Copy S3 Object from One Bucket to Another

To copy an object from one S3 bucket to another, you can call copy on the destination Bucket resource:

```python
import boto3

s3 = boto3.resource('s3')

copy_source = {
    'Bucket': 'mybucket',
    'Key': 'mykey'
}

bucket = s3.Bucket('otherbucket')
bucket.copy(copy_source, 'otherkey')
```

Or you can call the copy method of the underlying client through s3.meta.client:

```python
import boto3

s3 = boto3.resource('s3')

copy_source = {
    'Bucket': 'mybucket',
    'Key': 'mykey'
}

s3.meta.client.copy(copy_source, 'otherbucket', 'otherkey')
```
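S3 does not offer a move or rename operation for ordinary buckets, so moving an object means copying it and then deleting the original. Here is a minimal sketch built on the client copy shown above; move_object is a hypothetical helper. The delete runs only after the copy returns without raising, so a failed copy leaves the source untouched.

```python
def move_object(s3_client, src_bucket, src_key, dst_bucket, dst_key):
    """'Move' an object by copying it to the destination, then deleting the source."""
    copy_source = {'Bucket': src_bucket, 'Key': src_key}
    # Managed copy (same call as s3.meta.client.copy above).
    s3_client.copy(copy_source, dst_bucket, dst_key)
    # Reached only if the copy did not raise.
    s3_client.delete_object(Bucket=src_bucket, Key=src_key)
```

Usage: move_object(boto3.client('s3'), 'mybucket', 'mykey', 'otherbucket', 'otherkey').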

Discussion

That was a brief introduction to Boto3. Beyond S3, Boto3 clients cover almost every AWS service operation, so you can control nearly all of the platform from Python.