We have provided an example of How to Query S3 Objects With S3 Select via console. In this post, we will show you how you can filter large data files using the S3 Select via the Boto3 SDK.

Scenario

Assume that we have a large file (can be csv, txt, gzip, json etc) stored in S3, and we want to filter it based on some criteria. For example, we want to get specific rows or/and specific columns. Let’s see how we can do it with S3 Select using Boto3.

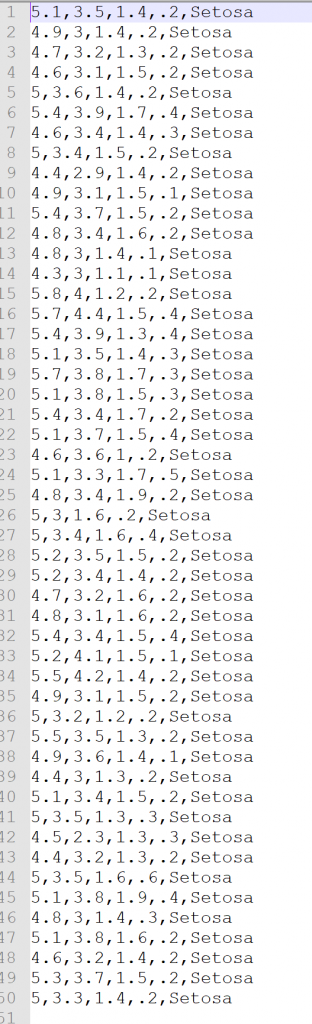

We will work with the iris.csv file which is in gpipis-iris-dataset bucket. Our goal is to get only the rows of “Setosa” variety. Let’s get our hands dirty. We will work with the “select_object_content” method of Boto3.

import boto3

import pandas as pd

client = boto3.client('s3')

resp = client.select_object_content(

Bucket = 'gpipis-iris-dataset',

Key = 'iris.csv',

Expression = """select * from S3Object s where s.variety='Setosa'""",

ExpressionType = 'SQL',

InputSerialization = {'CSV': {'FileHeaderInfo': 'Use'}},

OutputSerialization = {'CSV': {}}

)

# create an empty file

f = open("myfile.txt","w")

f.close()

# read each record and append it to "myfile"

for event in resp['Payload']:

if 'Records' in event:

tmp = event['Records']['Payload'].decode()

file1 = open("myfile.txt","a")

file1.write(tmp)

print(event['Records']['Payload'].decode())

file1.close()

# read the "myfile.txt" with pandas in order to confirm that it works as expected

df = pd.read_csv("myfile.txt", header=None)

print(df)

As we can see, we generated the “myfile.txt” which contains the filtered iris dataset.